Third-party code is a plague infecting the internet. Some 93% of pages include at least one third-party resource, according to the 2019 Web Almanac’s research. If you’re running a website in 2020, chances are you have at least one third-party JavaScript file being loaded on it. Third-party code is one of the greatest contributors (if not the greatest) to website performance degradation. Loading third-party JavaScript (and other types of resources), while convenient, comes with a cost: slower websites. Slower sites yield a poorer user experience, and a poorer user experience means lower conversion rates, higher abandonment, and so on. In short, user experience matters. Still not convinced? Starting in 2021, user experience is going to factor into your search rankings on Google. The following guide outlines exactly how third-party JavaScript impacts performance and what you can do to limit the damage.

Why does third-party JavaScript impact performance so much?

Render Blocking

If you’ve used Google’s PageSpeed Insights or Lighthouse performance tools, you’ve probably seen a warning about render-blocking resources like the following:

“This rule triggers when PageSpeed Insights detects that your HTML references a blocking external JavaScript file in the above-the-fold portion of your page.” – Google

By default (that is, without the async or defer attributes covered later in this guide), external JavaScript files are render-blocking resources. Here’s a rundown of what this means:

When the browser is parsing the markup of a page and encounters a script, it has to stop and execute that script before it can continue parsing the page. Rendering of the page is blocked while this happens. If the script in question comes from a third party, this effect is doubly bad: the parser first has to wait for the file to be downloaded from another server before it can execute it and then carry on building the DOM.

Content that follows a blocking script can’t be parsed, let alone rendered, until that script has been downloaded and executed, so a blocking script in the <head> holds back the first render of the entire page. Users cannot interact with an empty page, so rendering as quickly as possible is critical to user experience. The good news is that we can do quite a bit to limit the render-blocking effects. More on this later.

Scripts all the way down

Another major reason that third-party JavaScript impacts performance so greatly is that external scripts often load other JavaScript onto the page. For example, some popular services provide a tiny JavaScript embed that, on its surface, doesn’t seem so bad in terms of kilobytes, but it is only that small because it loads the full payload of the library using document.write(). This technique simply writes more scripts into your page after the initial script has run.

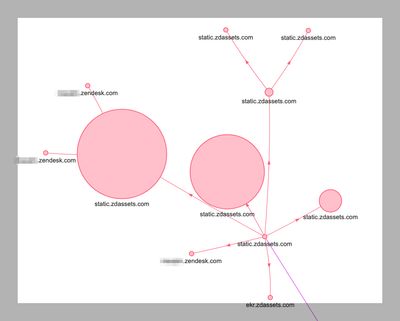

This technique is clever, but in the end the rendering of the page is still blocked. Even worse, using document.write() may be disallowed entirely by the browser. The image below includes a request map illustrating what this technique looks like:

We can see that Zendesk, a popular support tool, initially loads a tiny bit of JS on the main application (small circle, bottom right), but quickly loads other, much larger assets (bigger circles).
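When you control how a widget gets onto the page, the usual alternative to document.write() is to create the script element through the DOM. The sketch below is a minimal illustration of that pattern, not Zendesk’s or any vendor’s actual embed code; the `injectScript` helper, its `doc` parameter (normally the global `document`, made injectable here so the function can be tested outside a browser), and the vendor URL are hypothetical. Scripts inserted this way don’t block the parser, although their downloads still compete for bandwidth and their execution still competes for the main thread.

```javascript
// Minimal sketch (hypothetical helper): load a script without document.write().
// A <script> element created through the DOM does not block the HTML parser.
function injectScript(src, doc = globalThis.document) {
  const script = doc.createElement("script");
  script.src = src;
  // Dynamically inserted scripts are already async by default; setting the
  // flag explicitly just documents the intent. Use `false` instead if several
  // injected scripts must execute in insertion order.
  script.async = true;
  // Connecting the element to the document is what starts the download.
  doc.head.appendChild(script);
  return script;
}

// Usage in a browser (hypothetical vendor URL):
// injectScript("https://widget.example.com/full-library.js");
```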

Worse still, sometimes these scripts load dependencies that the main application is already serving. We have seen third-party chat and review services load the entire jQuery library onto the page, even though the main application was already loading it! Often, these dependencies aren’t even optional. We like to avoid using jQuery where possible, but loading it twice is just terrible for performance.
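Duplicate dependencies like this are easy to miss by eye. The following is a rough sketch that assumes two script URLs refer to the same library when their file names match once a `.min` suffix and a trailing version number are stripped; that heuristic, and the `libraryKey` and `findDuplicateLibraries` names, are our own. It will miss libraries bundled inside a vendor’s own file and may occasionally flag unrelated files that happen to share a name.

```javascript
// Heuristic (our assumption): reduce a script URL to a "library key" by taking
// its file name and stripping a ".min" suffix and a trailing version number,
// so ".../jquery-3.5.1.min.js" and ".../jquery.min.js" both become "jquery.js".
// The query string is ignored because only the pathname is inspected.
function libraryKey(url) {
  const file = new URL(url).pathname.split("/").pop().toLowerCase();
  return file
    .replace(/\.min(?=\.js$)/, "")
    .replace(/[-.@]?\d+(\.\d+)*(?=\.js$)/, "");
}

// Group absolute script URLs by library key and report keys seen more than once.
function findDuplicateLibraries(scriptUrls) {
  const byKey = new Map();
  for (const url of scriptUrls) {
    const key = libraryKey(url);
    if (!byKey.has(key)) byKey.set(key, []);
    byKey.get(key).push(url);
  }
  return [...byKey]
    .filter(([, urls]) => urls.length > 1)
    .map(([library, urls]) => ({ library, urls }));
}

// Usage in the browser console. Only <script> elements still in the document
// are seen; `.filter(Boolean)` drops inline scripts, whose src is "".
// findDuplicateLibraries(Array.from(document.scripts, (s) => s.src).filter(Boolean));
```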

How to Limit Third-Party Influence on Performance

Audit third-party JavaScript

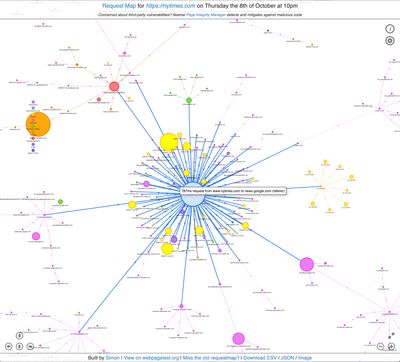

The first, and most obvious way to limit 3rd party JavaScript’s impact on performance is to not load it in the first place. Eliminate that which isn’t necessary. Start by auditing every piece of JavaScript on your site. Just about every site we encounter has some bit of 3rd party technical debt that can be eliminated. For scripts that must stay, try to avoid loading JavaScript files globally, i.e. loading them across the entire website when they might only be needed on a single page or subset of pages. One tool that we have found particularly useful for this purpose is the Request Map Generator tool, by Simon Hearne. It will create a map of all of the requests your pages are making, and visually illustrate their potential impact. It’s a great tool for visualizing what your key pages are doing. For example, here is the current New York Times homepage, which loads way too much JavaScript:
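The Request Map Generator is the better tool for a visual overview, but for a quick tally straight from the browser, the standard Resource Timing API (`performance.getEntriesByType("resource")`) already lists every request the page made. The sketch below groups those entries by host. The function name is hypothetical, and treating any hostname other than the page’s own as third-party is a simplification (your own CDN subdomain would be counted too). Also note that browsers report a `transferSize` of 0 for cross-origin resources that don’t send a `Timing-Allow-Origin` header, so byte totals for third parties are often undercounts; request counts are more reliable.

```javascript
// Sketch: tally requests per third-party host from Resource Timing entries.
// Each entry needs a `name` (the absolute URL) and, optionally, `transferSize`.
function summarizeThirdParty(entries, pageOrigin) {
  const pageHost = new URL(pageOrigin).hostname;
  const byHost = new Map();
  for (const { name, transferSize = 0 } of entries) {
    const url = new URL(name);
    // Skip data:, blob: and similar URLs, plus first-party requests.
    if (!url.protocol.startsWith("http") || url.hostname === pageHost) continue;
    const summary = byHost.get(url.hostname) ?? { host: url.hostname, requests: 0, bytes: 0 };
    summary.requests += 1;
    summary.bytes += transferSize; // may be 0 without Timing-Allow-Origin
    byHost.set(url.hostname, summary);
  }
  // Most requests first; ties broken by reported bytes.
  return [...byHost.values()].sort((a, b) => b.requests - a.requests || b.bytes - a.bytes);
}

// Usage in the browser console on the page being audited:
// console.table(summarizeThirdParty(performance.getEntriesByType("resource"), location.origin));
```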

Async & Defer

Modern browsers allow us to leverage two attributes that can help limit the impact of external JavaScript: async and defer. As mentioned above, scripts are render-blocking by default, and thus problematic for performance. Async and defer allow us to limit that render-blocking effect in different ways. First, a visualization of how the browser behaves when encountering scripts that do not use async or defer:

Without async or defer, when the parser encounters a script, it must wait for that script to download and execute before it continues parsing the document.

async

Short for “asynchronous,” the async attribute tells the browser to download the script in the background without blocking the parser. As soon as the script has downloaded, the browser executes it, pausing parsing while it runs. If the page contains multiple async scripts, they may finish downloading in any order, and each one executes as soon as it is ready, so their execution order is not guaranteed. Use async when the script is self-contained: it doesn’t need the DOM to be fully parsed and doesn’t rely on another library having loaded first.

With async, the browser keeps parsing the HTML while the script downloads. Once the download completes, parsing pauses while the script executes, and then the parser carries on with the rest of the document.

defer

With the defer attribute, the browser still downloads the script in the background, but its execution is deferred until the document has been fully parsed (just before the DOMContentLoaded event fires). Deferred scripts are also guaranteed to execute in the order in which they are declared. Use defer when the script in question either relies on a fully parsed DOM or its execution isn’t high-priority.

With defer, the browser continues parsing the HTML while the script downloads. Once the document is fully parsed, the script executes.
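To make the difference in ordering concrete, here is a toy model of the two rules described above; it is not how browsers are implemented. It assumes every download starts at time 0, that HTML parsing finishes at a fixed time (`parseDoneAt`), and that executing a script takes no time. The function and field names (`executionOrder`, `mode`, `readyAt`) are hypothetical.

```javascript
// Toy model: compute the order in which async and defer scripts execute.
// `scripts` is in document order; `readyAt` is when each download finishes (ms).
function executionOrder(scripts, parseDoneAt) {
  // async: each script runs as soon as its own download finishes,
  // regardless of where it appears in the document.
  const runs = scripts
    .filter((s) => s.mode === "async")
    .map((s) => ({ name: s.name, at: s.readyAt }));

  // defer: scripts run after parsing is done, strictly in document order,
  // so a script that downloads early still waits for the ones declared before it.
  let clock = parseDoneAt;
  for (const s of scripts) {
    if (s.mode !== "defer") continue;
    clock = Math.max(clock, s.readyAt);
    runs.push({ name: s.name, at: clock });
  }

  // Array.prototype.sort is stable, so deferred scripts sharing a time
  // keep their document order.
  return runs.sort((a, b) => a.at - b.at).map((r) => r.name);
}

// "app.js" downloads first but still runs after "framework.js" (defer keeps
// document order), while "chat.js" overtakes "analytics.js" (async does not).
const order = executionOrder([
  { name: "analytics.js", mode: "async", readyAt: 400 },
  { name: "chat.js", mode: "async", readyAt: 150 },
  { name: "framework.js", mode: "defer", readyAt: 300 },
  { name: "app.js", mode: "defer", readyAt: 100 },
], 200);
// order is ["chat.js", "framework.js", "app.js", "analytics.js"]
```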

For a deeper understanding of async vs. defer, be sure to visit Ire Aderinokun’s guide. It helped tremendously with my own understanding and inspired the animations I created above to help visualize their differences.

Prioritize critical assets via preloading and resource hinting

Preloading is another great way to improve the overall performance of your website. While it is most commonly used for critical assets like web fonts (which the browser often can’t discover until the CSS referencing them has loaded) and images, this technique can also be useful for loading critical JS.

When your site has a snippet of JS that needs to be loaded sooner rather than later, preloading and resource hinting can be a nice win.

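<link rel="preload" as="script" href="critical.js">

By adding a <link> element like this to the <head> of the document, we inform the browser that we’d like to prioritize loading critical.js, and the as="script" attribute “hints” at the resource’s type up front so the browser can assign it the right priority. Keep in mind that preloading only fetches the file; it still has to be referenced by a regular <script> tag (ideally with async or defer) to execute.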

This type of prioritization can help with overall performance, but also with newer Lighthouse metrics like Cumulative Layout Shift, especially if your JavaScript is altering the DOM with element insertions, or if your web fonts are loading very late in the chain.

You can read more about preloading critical path requests on Google’s web.dev site.

Self host where possible

Another option to help limit the impact of third-party JS is to self-host it. When you must load some third-party code, there is usually nothing stopping you from hosting it yourself. Doing so avoids the extra DNS lookup and connection setup needed to retrieve a file from another server. Coupled with async or defer, this can really help with those key Lighthouse metrics.

Cautiously use a tag manager like Google Tag Manager

Tag managers like Google Tag Manager (GTM) are commonplace for teams with many marketing and tracking needs. They allow marketers to deploy marketing tags (scripts, pixels, etc.) without needing developer intervention. Essentially, they let marketers control which scripts are loaded and when, which user events trigger them, and so on, all without needing a developer to code around those conditions. In theory, they make a lot of sense. In practice, they are often abused, with competing stakeholders running amok and loading script upon script because it’s so easy to do. While GTM loads scripts asynchronously, if your website is loading dozens of scripts and multiple megabytes of data, your performance is still going to be hosed. We recommend that teams use tag managers cautiously and routinely audit what is being loaded on key pages.

Wrapping up

The best way to avoid the performance impacts of third-party JavaScript is to not load it in the first place. When you must, use every tool at your disposal to limit its impact. As browsers continue to evolve and improve, we’ll be sure to update this post with the latest and greatest.